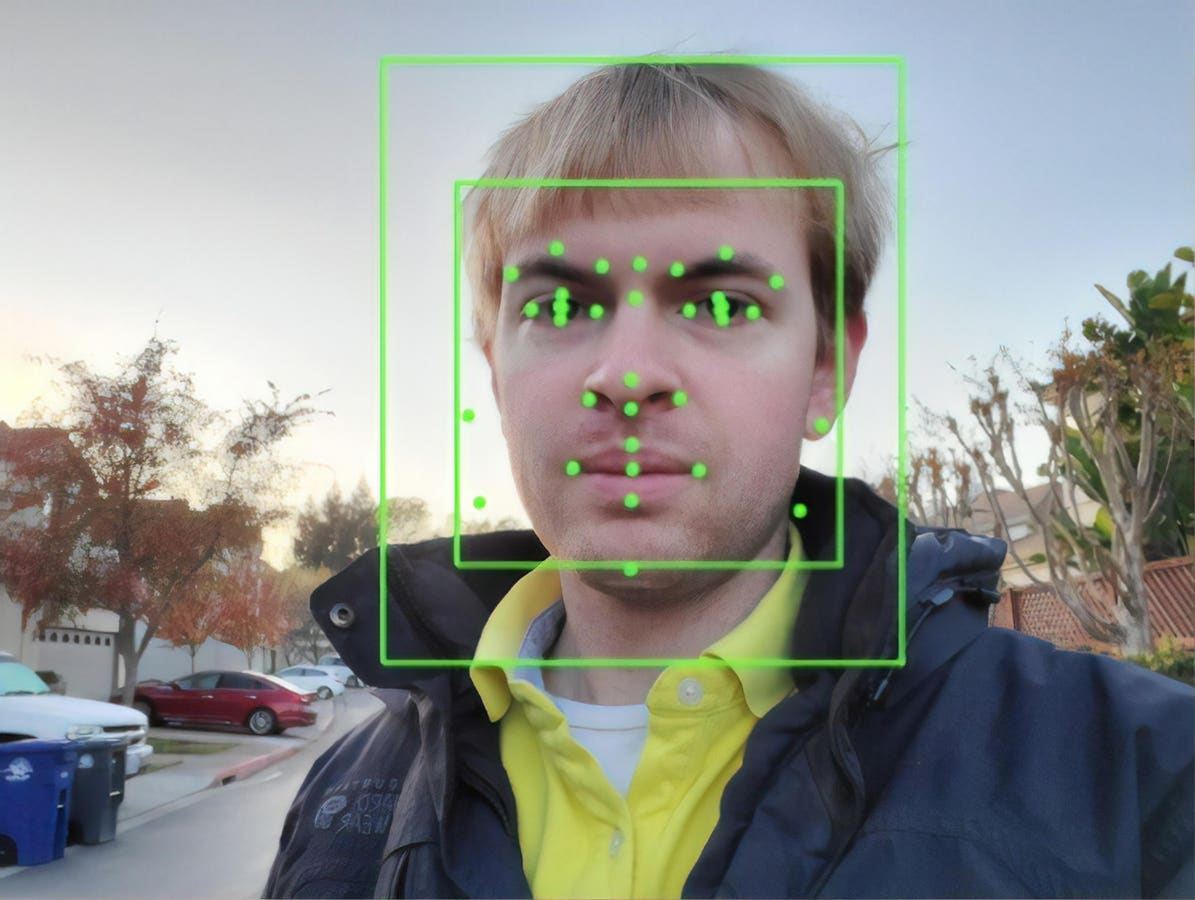

Output of an Artificial Intelligence system from Google Vision, performing Facial Recognition on a …

As we enter 2025, it is clear the AI industry is on the verge of explosive growth, but also of a moment of truth. The race to dominate AI is heating up, driven by geopolitical rivalries, billions in government funding, and a relentless push for the next breakthrough. With all this exponential momentum, we have to be prudent: abuse of AI technology in an overly centralized way could lead to its downfall, and integrating it with blockchain technology and crypto can be a credible response to that risk.

The Great AI Divide: The Race for Global Influence in the Digital Age

The AI race is not just a corporate endeavor; it is a matter of national strategy. The United States, recognizing AI's strategic importance, has already imposed strict export controls on advanced technologies to countries like China. This has forced China to double down on domestic innovation, with state-backed investments pouring into firms like Huawei to develop homegrown alternatives to NVIDIA's AI chipsets.

China will likely scale up its AI efforts in 2025, treating AI as a cornerstone of national competitiveness. Similarly, in the U.S., the AI industry will see a surge in private capital, federal funding, and corporate R&D initiatives. AI will also play a central role in other key sectors such as electric vehicles (EVs), defense technology, biotechnology, aerospace, and aviation, which are already caught in the geopolitical crossfire of tariffs and trade wars.

This escalating competition may lead to breathtaking advances in AI capabilities. However, it will also create conditions ripe for an unsustainable bubble.

The Bubble of 2025: A Dot-Com Déjà Vu

Parallels to the late-1990s dot-com bubble are already emerging. A flood of capital and overambitious promises are setting the stage for overinvestment and inevitable failures. As new AI startups emerge and established companies pivot to capitalize on the trend, we'll likely see a wave of poorly conceived projects: hastily designed technologies that fail to deliver on their promises.

AI failures in 2025 will stem from a lack of focus on real-world applicability and long-term value creation. Startups chasing the "next big thing" will often prioritize hype over substance, leading to a proliferation of tools and platforms that are either redundant or untrustworthy.

This lack of trust is particularly troubling in consumer-facing AI products. Consider the development of AI-powered personal assistant robots for homes. While the prospect of such technology may feel futuristic, many consumers are hesitant to adopt it without robust assurances about safety, privacy, and ethical safeguards.

The Spooky Reality of Powerful AI

The rapid deployment of AI without meaningful reflection raises unsettling questions about its purpose. Do we want AI to amplify consumer convenience and corporate profits, or should it aim to solve humanity's most pressing challenges?

Without trust, even the most powerful AI applications will struggle to gain widespread acceptance. The prospect of an intelligent robot managing your household sounds intriguing, but would you really welcome one into your home if you could not trust it with your data or your safety?

Perhaps no area demands more scrutiny than the use of AI in military applications. The prospect of autonomous weapons, AI-driven surveillance, and battlefield decision-making systems raises ethical, operational, and existential questions. When machines hold the power to decide matters of life and death, the stakes go beyond mere technical failure; they reach the heart of human rights, international law, and global security.

Unintended consequences, such as AI misidentifying targets or being weaponized in unforeseen ways, could have catastrophic outcomes. Moreover, the global arms race to militarize AI may lead to a destabilizing feedback loop, in which nations feel compelled to escalate their capabilities regardless of the moral implications.

To counter this, industry and government stakeholders must establish rigorous standards and guardrails to govern the military use of AI. Transparency, accountability, and international cooperation are critical to preventing AI's misuse in ways that could endanger humanity itself.

A Call for Responsible AI Development

In the rush to innovate, the AI industry must pause to reflect on its purpose. Governments, companies, and developers must ask themselves: What is the intent behind the technology we're building?

This isn't a call to halt innovation but to ensure it is grounded in ethics, sustainability, and human-centric design. Regulators have a role in creating frameworks that encourage responsible innovation without stifling creativity. Businesses need to prioritize transparency and trust as much as speed and scalability. Consumers must demand that AI technologies align with their values and expectations.

The industry itself has a responsibility to address AI's broader implications, particularly in sensitive areas such as military applications, healthcare, and public policy. By embedding ethical considerations into the fabric of AI development, we can mitigate risks while unlocking AI's transformative potential.

How Blockchain and Decentralization Can Help

One of the most pressing challenges in AI is the "black box" dilemma, where AI decisions are opaque and difficult to audit. Blockchain's immutable and transparent ledger offers a powerful solution by recording every stage of the AI lifecycle, from data collection and training to deployment decisions. Because each record is cryptographically linked to the one before it, any later tampering becomes detectable, which keeps these systems auditable and trustworthy.
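To make the ledger idea concrete, here is a minimal sketch of a hash-chained, append-only log of AI lifecycle events, written in Python. It is an illustration under stated assumptions, not a blockchain implementation: the `LifecycleLedger` class, its stage names, and the record fields (such as `dataset` or `threshold`) are all hypothetical, and a real blockchain would add replication and consensus across many independent parties, which this single-process sketch does not model. What it does show is the tamper-evidence property: each entry stores the SHA-256 hash of the previous one, so editing any past record breaks verification.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class LifecycleLedger:
    """Hypothetical append-only, hash-chained log of AI lifecycle events.

    Illustration only: a real blockchain also distributes the ledger and
    uses a consensus protocol, neither of which is modeled here.
    """

    blocks: list = field(default_factory=list)

    @staticmethod
    def _digest(payload: dict) -> str:
        # Canonical JSON (sorted keys) so identical records always hash identically.
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def record(self, stage: str, details: dict) -> str:
        # Each new block commits to the previous block's hash, forming a chain.
        prev_hash = self.blocks[-1]["hash"] if self.blocks else "0" * 64
        body = {"index": len(self.blocks), "stage": stage,
                "details": details, "prev_hash": prev_hash}
        block = dict(body, hash=self._digest(body))
        self.blocks.append(block)
        return block["hash"]

    def verify(self) -> bool:
        # Recompute every hash and every link; any edited record breaks the chain.
        prev_hash = "0" * 64
        for block in self.blocks:
            body = {k: v for k, v in block.items() if k != "hash"}
            if block["prev_hash"] != prev_hash or self._digest(body) != block["hash"]:
                return False
            prev_hash = block["hash"]
        return True


if __name__ == "__main__":
    ledger = LifecycleLedger()
    # Hypothetical lifecycle events, from data collection to a deployment decision.
    ledger.record("data_collection", {"dataset": "faces-v1", "rows": 10000})
    ledger.record("training", {"model": "classifier-v1", "epochs": 5})
    ledger.record("deployment", {"decision": "approve", "threshold": 0.9})
    print(ledger.verify())  # True

    # Quietly rewriting the training history is detected.
    ledger.blocks[1]["details"]["epochs"] = 50
    print(ledger.verify())  # False
```

The design choice worth noting is that verification needs nothing but the log itself: an auditor can recompute the chain independently. In a decentralized setting, many parties would hold copies of the chain, so no single operator could rewrite it unnoticed.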

By integrating blockchain into AI development, we can ensure transparency, enforce ethical guidelines, and prevent monopolistic control, aligning the AI industry with the principles of trust, decentralization, and human benefit. We call this effort "decentralized AI".

2025 will be a pivotal year for AI in general and for decentralized AI in particular. It will bring groundbreaking advances, record-breaking investments, and, unfortunately, a slew of failures. But if the industry can use these challenges as an opportunity to recalibrate, it may emerge stronger, more focused, and more aligned with humanity's needs.

This is a moment for all of us (investors, technologists, regulators, and consumers) to think deeply about the role AI should play in our lives. It's not just about what we can build; it's about why we build it.

By fostering a culture of purposeful innovation and ethical responsibility, we can ensure that AI serves as a force for good, not just in 2025 but for generations to come.