Data source

This work makes use of data from the National Violent Death Reporting System (NVDRS) dataset, covering 267,804 recorded suicide death incidents from 2003 to 2020 across all 50 U.S. states, Puerto Rico, and the District of Columbia [2]. To access the NVDRS dataset, researchers must meet certain eligibility requirements and take steps to ensure confidentiality and data security. Our research was approved through an NVDRS Restricted Access Database (RAD) proposal, which gave us the necessary permissions to access the data and undertake the work described here. We also obtained approval from Weill Cornell Medicine's Institutional Review Board to undertake our study 23-12026810-01, titled "Use AI/ML to Address the Crisis of Suicide".

Each incident instance is accompanied by two death investigation notes, one from the Coroner or Medical Examiner (CME) perspective and the other from the Law Enforcement (LE) perspective. The NVDRS contains over 600 unique data elements for each incident, including the identification of suicide crises: precipitating events that contributed to the occurrence of a suicide and that occurred within 2 weeks before the suicide death [4]. Examples of suicide crises include Family Relationship, Physical Health, and Mental Health crises. Suicide crises are annotated based on the content of the CME and LE reports. The data annotator (i.e., abstractor) selects from a list of predefined crises and must code all identified crises related to each incident. If either the CME report or the LE report indicates the presence of a crisis, the abstractor must acknowledge and record this crisis in the database [4].

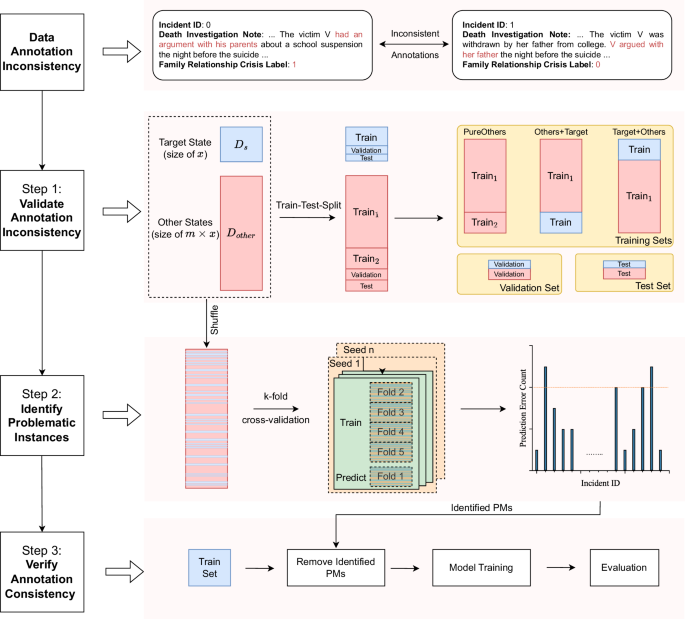

This study has three tasks: validating the inter-state annotation inconsistencies, identifying the specific data instances that caused these inconsistencies, and verifying the improvement in annotation consistency after removing the identified problematic data instances. We present our methods and conduct experiments using three crises as illustrative examples: Family Relationship Crisis, Mental Health Crisis, and Physical Health Crisis (the state-wise statistics are detailed in Table 1). These variables were chosen for their higher prevalence of positive instances in the NVDRS dataset and their poor classification scores, as demonstrated in prior work [3]. Definitions and examples of these three crises can be found in Supplementary Table 1. We also addressed the positive/negative class imbalance in the NVDRS dataset through data pre-processing. First, states with fewer than 10 positive instances were excluded to ensure adequate training data. Next, for each crisis, we created a balanced class distribution for every state by keeping the positive instances intact and down-sampling the negative instances, ensuring an equal number of both.
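The two pre-processing steps can be illustrated with a minimal sketch. It assumes each record is a dictionary with hypothetical `state` and `label` keys (1 for positive, 0 for negative); these field names and the toy data are illustrative and are not taken from the NVDRS schema. If a state has fewer negatives than positives, the sketch simply keeps all negatives, which is also an assumption.

```python
import random
from collections import defaultdict

MIN_POSITIVES = 10  # from the text: states with fewer than 10 positives are excluded


def balance_by_state(records, min_positives=MIN_POSITIVES, seed=0):
    """Return {state: balanced records} with all positives kept and negatives down-sampled."""
    rng = random.Random(seed)
    by_state = defaultdict(lambda: {0: [], 1: []})
    for rec in records:
        by_state[rec["state"]][rec["label"]].append(rec)

    balanced = {}
    for state, groups in by_state.items():
        positives, negatives = groups[1], groups[0]
        if len(positives) < min_positives:
            continue  # too few positive instances for this crisis
        n_neg = min(len(positives), len(negatives))
        balanced[state] = positives + rng.sample(negatives, n_neg)
    return balanced


# Toy data: state "A" has 12 positives, state "B" only 3.
toy = (
    [{"state": "A", "label": 1} for _ in range(12)]
    + [{"state": "A", "label": 0} for _ in range(40)]
    + [{"state": "B", "label": 1} for _ in range(3)]
    + [{"state": "B", "label": 0} for _ in range(50)]
)
balanced = balance_by_state(toy)
```

In this toy run, state "B" is dropped and state "A" keeps its 12 positives plus 12 sampled negatives.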

Validate annotation inconsistency

Inspired by Zeng et al. [13], our approach is grounded in the assumption that if the label annotations for two datasets are consistent, models trained separately on these datasets should exhibit equivalent predictive capabilities when applied to each other. In practical terms, given a dataset D, if we train a model using one of its subsets to predict the remaining portion, we expect to observe comparable evaluation performance for both subsets.

Based on this assumption, we first explore whether the label annotations in the target state s are consistent with those in all other states (Step 1 in Fig. 1). Specifically, given the annotated data of the target state \({D}_{s}\subset D\) (where \({D}_{s}\) has a size of x), we sample m exclusive subsets (each of size x) from the annotated data of the other states, denoted as \({D}_{other}\). It is worth noting that \({D}_{s}\cap {D}_{other}=\emptyset\).
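As a rough illustration of this sampling step, the sketch below draws m subsets of size x from a pool of other-state instances. It interprets "exclusive" as mutually disjoint, which is an assumption, and the function name and integer IDs are hypothetical.

```python
import random


def sample_exclusive_subsets(other_pool, x, m, seed=0):
    """Draw m mutually disjoint subsets of size x from the other-state pool."""
    if m * x > len(other_pool):
        raise ValueError("pool too small for m disjoint subsets of size x")
    rng = random.Random(seed)
    drawn = rng.sample(other_pool, m * x)  # sampling without replacement
    return [drawn[i * x:(i + 1) * x] for i in range(m)]


# Toy example: 100 other-state instance IDs, target state size x = 20, m = 4.
other_ids = list(range(100))
subsets = sample_exclusive_subsets(other_ids, x=20, m=4)
```

Sampling once without replacement and slicing the result guarantees that no instance appears in more than one subset.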

Fig. 1: In Step 1, the size of the other states' \({Train}_{2}\) set equals the size of the target state's Train set, ensuring that the three new training sets are of the same size. In Step 2, the k-fold cross-validation procedure is repeated n times using different random seeds. For each data instance, we record its prediction error count and eventually identify the problematic instances by thresholding these counts. PMs: potential errors.

We then split \({D}_{s}\) and \({D}_{other}\) into training, validation, and test sets with a ratio of 8:1:1, and construct three different training sets of the same size: (1) PureOthers, comprising only samples from states other than the target state; (2) Others+Target, combining samples from other states with samples from the target state; and (3) Target+Others, similarly combining samples from the target state and samples from other states, in that order. For each training set, we trained one transformer-based binary classifier, specifically the Bidirectional Encoder Representations from Transformers (BERT) model [24]. Our goal is to compare the classification performance across the different training set combinations. Specifically, we assess the inconsistencies between each state and the other states in the annotations of Physical Health, Family Relationship, and Mental Health crises. To quantify the inconsistency, we compute ΔF-1 on the test sets of both the target state and the other states. The inconsistency is measured as the difference between the average F-1 score of the models trained on mixed training data (Others+Target and Target+Others) and the F-1 score of the model trained solely on data from other states (PureOthers):

$$\Delta F\text{-}1=\mathrm{Difference}\left({F\text{-}1}_{\mathrm{Mixed}}-{F\text{-}1}_{\mathrm{PureOthers}}\right) \tag{1}$$

$${F\text{-}1}_{\mathrm{Mixed}}=\mathrm{Mean}\left({F\text{-}1}_{\mathrm{Others+Target}},{F\text{-}1}_{\mathrm{Target+Others}}\right) \tag{2}$$

When data from the target state are incorporated into training, a larger positive ΔF-1 on the test set of the target state, accompanied by a smaller negative ΔF-1 on the test set of other states, indicates a more pronounced annotation inconsistency between the target state and other states.
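The inconsistency measure is simple arithmetic, sketched below. The F-1 values are made-up numbers chosen only to show the sign pattern described above; they are not results from the study.

```python
from statistics import mean


def delta_f1(f1_others_target, f1_target_others, f1_pure_others):
    """Delta F-1: mean F-1 of the two mixed-data models minus the PureOthers F-1."""
    f1_mixed = mean([f1_others_target, f1_target_others])
    return f1_mixed - f1_pure_others


# Hypothetical scores on the target state's test set: mixed training helps.
on_target = delta_f1(0.80, 0.82, 0.70)

# Hypothetical scores on the other states' test set: mixed training hurts.
on_others = delta_f1(0.74, 0.72, 0.78)
```

Here `on_target` is positive and `on_others` is negative, the pattern that the text associates with annotation inconsistency between the target state and the other states.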

Identify problematic instances

To identify problematic data instances in the target state that might cause the label inconsistencies between \({D}_{s}\) and \({D}_{other}\), we introduce a k-fold cross-validation approach (Step 2 in Fig. 1), inspired by the approach in Wang et al. [21]. Our method involves the following steps: we concatenate \({D}_{s}\) and \({D}_{other}\) into one set, randomly shuffle the data to ensure it is well mixed, and divide the shuffled dataset into k folds. Each unique fold is treated as a hold-out set, while the remaining k-1 folds serve as the training set. We train independent suicide circumstance classifiers to identify problematic instances in each fold. Throughout this process, each individual data sample is assigned to a specific fold, where it remains for the duration of the cross-validation. This ensures that each data sample is used once in the hold-out set and contributes to training the model k-1 times. For each data sample in the hold-out set, we compare the model's prediction to the ground truth label and count the number of discrepancies.

To reduce randomness and enhance the reliability of our findings, we repeat the k-fold cross-validation procedure multiple times (i.e., n times), using different random data partitions with distinct random seeds for each iteration. Then, for each data instance in the dataset, we obtain n estimations. We denote by \({c}_{i}\) (0 ≤ \({c}_{i}\) ≤ n) the number of times a data instance \({x}_{i}\) is flagged for potential labeling errors across all n estimations. This count \({c}_{i}\) indicates the confidence level that \({x}_{i}\) might have a labeling error. Following this, we apply a thresholding mechanism to the prediction error counts of each data sample in \({D}_{s}\). This thresholding allows us to effectively identify and flag those data instances that repeatedly show inconsistencies.
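The repeated k-fold procedure and the thresholding can be sketched as follows. To keep the example self-contained, a toy one-dimensional threshold classifier (`fit_threshold`) stands in for the BERT-based classifier; this stand-in, the function names, and the toy data are all assumptions made for illustration.

```python
import random
from collections import Counter


def fit_threshold(train):
    """Toy stand-in classifier: cut point midway between the two class means."""
    pos = [x for x, y in train if y == 1]
    neg = [x for x, y in train if y == 0]
    cut = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return lambda x: int(x > cut)


def count_prediction_errors(data, k=5, n=5, fit=fit_threshold):
    """Repeat k-fold cross-validation n times; count hold-out errors per instance."""
    errors = Counter()
    for seed in range(n):  # a different shuffle (random seed) per repetition
        idx = list(range(len(data)))
        random.Random(seed).shuffle(idx)
        folds = [idx[i::k] for i in range(k)]  # each index lands in exactly one fold
        for held_out in folds:
            held = set(held_out)
            predict = fit([data[i] for i in idx if i not in held])
            for i in held_out:
                x, y = data[i]
                if predict(x) != y:
                    errors[i] += 1
    return errors


def flag_instances(errors, threshold):
    """Flag instances whose error count reaches the threshold."""
    return sorted(i for i, c in errors.items() if c >= threshold)


# Toy data: label is 1 when x > 0, with two deliberately flipped labels.
data = [(x, int(x > 0)) for x in range(-10, 11) if x != 0]
data[2] = (-8, 1)   # flipped label
data[17] = (8, 0)   # flipped label

errors = count_prediction_errors(data, k=5, n=5)
flagged = flag_instances(errors, threshold=5)
```

Because each instance is held out exactly once per repetition, its count lies between 0 and n. In this toy setup the two flipped instances are misclassified in every repetition, so they reach the maximum count of 5.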

Verify annotation consistency

Once we have identified the problematic data instances in \({D}_{s}\), our next step is to evaluate whether these potential errors negatively impact the model's performance. To this end, we systematically remove data instances identified as potential errors from the training dataset. By removing these instances and re-training the model, we can assess the impact of these potential errors on the model's performance (Step 3 in Fig. 1). To measure the effectiveness of these removals, we introduce a random baseline for comparison, which randomly removes the same number of instances from the training set as those identified as problematic.
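A minimal sketch of how the two training sets for this comparison might be built is given below. The instance IDs and the flagged list are hypothetical; the only property taken from the text is that the random baseline removes the same number of instances as were flagged.

```python
import random


def remove_flagged(train_ids, flagged_ids):
    """Drop the instances identified as potential errors."""
    flagged = set(flagged_ids)
    return [i for i in train_ids if i not in flagged]


def remove_random(train_ids, n_remove, seed=0):
    """Random baseline: drop the same number of instances, chosen at random."""
    rng = random.Random(seed)
    dropped = set(rng.sample(train_ids, n_remove))
    return [i for i in train_ids if i not in dropped]


train_ids = list(range(50))          # hypothetical training instance IDs
flagged_ids = [3, 11, 27, 40]        # hypothetical potential errors

cleaned = remove_flagged(train_ids, flagged_ids)
baseline = remove_random(train_ids, n_remove=len(flagged_ids))
```

Both resulting sets have the same size, so any performance difference after re-training can be attributed to which instances were removed rather than how many.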

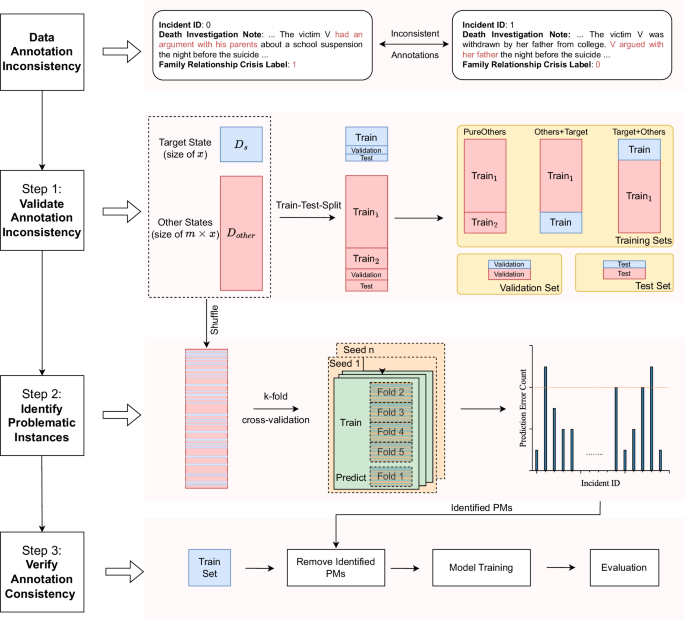

On a separate front, our efforts extend to manual correction of potential errors. After identifying the potential errors, we recruited two annotators to manually identify and correct the actual mis-labelings. The actual mis-labelings are defined as instances where the two annotators identify the ground truth annotations as incorrect. The two annotators received training on annotating labels following the NVDRS coding manual and resolved disagreements through discussion. Our objective is to show how consistent annotations can enhance the performance of classifiers. We employ an incremental training paradigm to demonstrate this with four training sets (Fig. 2): Others+Target, comprising the data from other states and the original data from the target state; Others+CorrectedTarget, comprising the data from other states and the data from the target state after correction; Target+Others, comprising the original data from the target state and the data from other states; and CorrectedTarget+Others, comprising the data from the target state after correction and the data from other states.

For each training set, we progressively incorporate more training samples in an incremental manner using a step size of T, to obtain a finer-grained view of how the corrected data impact the model performance. We train the classification models and analyze their performance on the test set. This process helps validate the label consistency and the effectiveness of the corrected data. We repeat all experiments n = 5 times using different random seeds.
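One way to read the incremental paradigm is as a sequence of growing prefixes of an ordered training set, sketched below. It assumes that a name such as Others+Target denotes the order in which the two sources are concatenated and that the final increment may be smaller than T; both points are interpretations, and the sample names are placeholders.

```python
def incremental_prefixes(first, second, step):
    """Concatenate two sources in order and return prefixes growing by `step` samples."""
    ordered = list(first) + list(second)
    sizes = list(range(step, len(ordered) + 1, step))
    if not sizes or sizes[-1] != len(ordered):
        sizes.append(len(ordered))  # last increment covers the remainder
    return [ordered[:s] for s in sizes]


others = ["o1", "o2", "o3", "o4"]
target = ["t1", "t2", "t3"]

others_then_target = incremental_prefixes(others, target, step=3)
target_then_others = incremental_prefixes(target, others, step=3)
```

A model would be trained on each prefix in turn; comparing the curves for the original and corrected target data then shows where in the sequence the corrections start to matter.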

Risk of bias evaluation

To better understand the risk of bias in the data annotation, we employed logistic regression models to examine whether the relationship between the suicide circumstances and demographic variables (i.e., race, age, and sex) changed after we removed the identified errors. NVDRS captures the victim's sex at the time of the incident according to the Death Certificate (DC). NVDRS follows U.S. Department of Health and Human Services (HHS) and Office of Management and Budget (OMB) standards for race/ethnicity categorization, which define standards for collecting and presenting data on race and ethnicity for all Federal reporting. In this work, we followed the HHS standard and used two categories for ethnicity (Hispanic or Latino, and Not Hispanic or Latino) and five categories for race (American Indian or Alaska Native, Asian, Black or African American, Native Hawaiian or Other Pacific Islander, White), following the OMB and HHS standards. One distinct logistic regression model was developed for each suicide circumstance.

Specifically, the predictor variable represented the specific comparison group (i.e., Black, youth (age under 24), female) and was coded as 1. This was then contrasted with the reference group (i.e., white, adult, male), coded as 0. We calculated the odds ratios (ORs) for each comparison group using the coefficient estimate associated with the predictor variable obtained from the corresponding logistic regression model. The OR quantifies the likelihood of the specific circumstance occurring in a comparison group versus the reference group. The OR is computed as follows: \({OR}={e}^{\text{Coefficient Estimate for the Comparison Group}}\). ORs greater than 1 indicate that the comparison group had higher circumstance rates than the reference group. We further calculated a 95% confidence interval (CI) for each OR based on the standard error of the coefficient estimate and the Z-score as follows:

$$\text{Lower CI Bound}={e}^{\text{Coefficient Estimate}-Z\times \text{Standard Error}},$$

$$\text{Upper CI Bound}={e}^{\text{Coefficient Estimate}+Z\times \text{Standard Error}}$$
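As a worked illustration, the sketch below converts a logistic regression coefficient and its standard error into an OR with a 95% CI. It builds the interval on the log-odds scale and then exponentiates, which is the standard Wald construction; the coefficient and standard error are made-up values, and Z = 1.96 is the usual two-sided 95% normal quantile.

```python
import math

Z_95 = 1.96  # two-sided 95% normal quantile


def odds_ratio_ci(coef, se, z=Z_95):
    """Return (OR, lower bound, upper bound) from a log-odds coefficient and its SE."""
    odds_ratio = math.exp(coef)
    lower = math.exp(coef - z * se)
    upper = math.exp(coef + z * se)
    return odds_ratio, lower, upper


# Hypothetical coefficient for a comparison group (coded 1) vs the reference group (coded 0).
or_est, ci_low, ci_high = odds_ratio_ci(coef=0.40, se=0.10)

# A zero coefficient corresponds to OR = 1, i.e., no difference between groups.
or_null, null_low, null_high = odds_ratio_ci(coef=0.0, se=0.10)
```

An interval that excludes 1, as in the first call, suggests that the circumstance rate differs between the comparison and reference groups at the 5% level.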

For two illustrative states (Ohio and Colorado), we computed the ORs of each circumstance variable in three sets of annotations: the original annotations from the NVDRS, the annotations after removing the errors identified by our method, and the annotations after randomly dropping the same number of instances as the identified errors. By comparing the ORs for the same subgroup across the different sets of annotations, we can examine whether the relationship between the suicide circumstances and demographic variables has changed.

Statistics and reproducibility

In this study, we used BioBERT [25] as our backbone model, known for its state-of-the-art performance, as demonstrated in our prior study [3]. BioBERT works with sequences of up to 512 tokens, producing 768-dimensional representations. About 5.1% of the NVDRS data have an input length longer than 512 tokens; these inputs were truncated before being fed to BioBERT. We framed suicide crisis detection as a text classification problem by feeding concatenated CME and LE notes into BioBERT and training it to classify whether a suicide crisis of interest is mentioned in the text. We appended a fully connected layer on top of BioBERT for classification.

For each crisis, states with fewer than 10 positive instances were excluded to ensure adequate training data for validating annotation inconsistency. For experiments, we sampled m = 4 exclusive subsets from the annotated data of other states. We conducted the experiments five times (n = 5) to strike a balance between achieving a reliable evaluation and maintaining a reasonable running time. This also ensures that both training and testing sets contain sufficient variations. Each iteration used a different random seed, and we reported the range of micro F-1 scores along with the average. For problematic instance discovery, we chose k = 5 for k-fold cross-validation following common machine learning practices. A higher frequency of discrepancies between prediction results and ground truth labels increases the likelihood of an incorrect ground truth label. We set the threshold at 5, effectively minimizing the number of false potential errors.

In our prior work, we applied BERT-based models to classify crises in NVDRS narratives [3]. We selected Physical Health, Family Relationship, and Mental Health crises in this study due to their higher frequency of positive instances and poor classification Area Under the ROC Curve (AUC) scores compared to other crises (Table 2 and Fig. 2 in Wang et al. [3]). Similarly, we chose Ohio and Colorado as illustrative states for their higher frequency of positive instances and superior state-wise classification F-1 scores compared to other states (Tables A2 and A3 in Wang et al. [3]).

Binary Cross Entropy Loss and the Adam optimizer were used during model training. We trained all the models for 30 epochs, and model selection was based on performance on the validation sets. The framework was implemented using PyTorch. We conducted our experiments using an Intel Xeon 6226R 16-core processor and Nvidia RTX A6000 GPUs.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.
