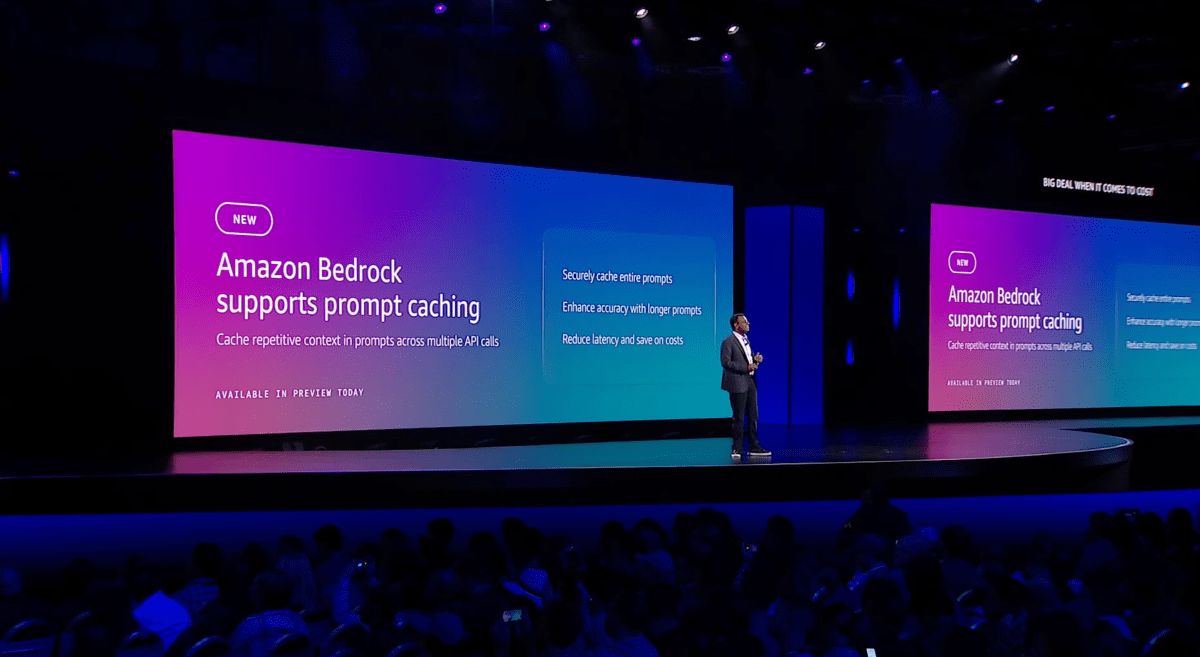

As businesses move from trying out generative AI in limited prototypes to putting it into production, they are becoming increasingly cost conscious. Using large language models isn't cheap, after all. One way to reduce cost is to go back to an old concept: caching. Another is to route simpler queries to smaller, more cost-efficient models. At its re:Invent conference in Las Vegas, AWS today announced both of these features for its Bedrock LLM hosting service.

Let's talk about the caching service first. "Say there is a document, and multiple people are asking questions about the same document. Every single time, you're paying," Atul Deo, the director of product for Bedrock, told me. "And these context windows are getting longer and longer. For example, with Nova, we're going to have 300k [tokens of] context and 2 million [tokens of] context. I think by next year, it could go even much higher."

Caching essentially ensures that you don't have to pay for the model to do repetitive work and reprocess the same (or substantially similar) queries over and over again. According to AWS, this can reduce cost by up to 90%. An added benefit is that the latency for getting an answer back from the model drops significantly (by up to 85%, AWS says). Adobe, which tested prompt caching for some of its generative AI applications on Bedrock, saw a 72% reduction in response time.
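To make the savings concrete, here is a toy model of prompt caching in Python. It is a simplified sketch, not how Bedrock implements the feature: it assumes the cost of a call is proportional to the number of tokens processed, treats whitespace-separated words as tokens, and uses a hypothetical `PrefixCache` class that processes a shared document once and reuses that work for every follow-up question.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PrefixCache:
    """Toy model of prompt caching (hypothetical, not Bedrock's implementation).

    The long shared context (e.g. a document) is "processed" once per unique
    prefix; later calls with the same prefix only pay for the new question.
    Whitespace-separated words stand in for tokens, and tokens stand in for cost.
    """
    store: dict = field(default_factory=dict)
    tokens_processed: int = 0

    @staticmethod
    def _key(prefix: str) -> str:
        # Identify a prefix by a hash of its exact text.
        return hashlib.sha256(prefix.encode("utf-8")).hexdigest()

    def ask(self, prefix: str, question: str) -> str:
        key = self._key(prefix)
        if key not in self.store:
            # Cache miss: pay once to process the full shared context.
            self.tokens_processed += len(prefix.split())
            self.store[key] = f"processed-context-{key[:8]}"
        # The question differs per call, so it is always processed.
        self.tokens_processed += len(question.split())
        return self.store[key]


doc = "lorem " * 1000  # hypothetical 1,000-token document
questions = [f"question number {i} about it" for i in range(10)]  # 5 tokens each

cache = PrefixCache()
for q in questions:
    cache.ask(doc, q)

# Cost if every call reprocessed the whole document plus the question.
uncached = sum(len(doc.split()) + len(q.split()) for q in questions)
saving = 1 - cache.tokens_processed / uncached
print(f"cached: {cache.tokens_processed}, uncached: {uncached}, saving: {saving:.1%}")
```

In this toy setup, ten questions about one 1,000-token document cost 1,050 processed tokens instead of 10,050, a saving of roughly 90%. Real savings depend on how much of each prompt is a shared, cacheable prefix, and a real service may still charge a reduced rate for cached tokens.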

The other major new feature is intelligent prompt routing for Bedrock. With it, Bedrock can automatically route prompts to different models in the same model family to help businesses strike the right balance between performance and cost. The system automatically predicts (using a small language model) how each model will perform on a given query and then routes the request accordingly.
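The routing idea can be sketched the same way. In the following Python sketch, everything is hypothetical: the two model names and their prices are made up, and `predict_quality` is a crude keyword-and-length heuristic standing in for the small language model that, per AWS, makes the prediction. The router picks the cheapest model whose predicted quality is within a tolerance of the best prediction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    cost_per_1k_tokens: float  # hypothetical price, arbitrary units


# Two hypothetical members of the same model family (Bedrock's router only
# routes within one family).
SMALL = Model("family-small", 0.1)
LARGE = Model("family-large", 1.0)

HARD_HINTS = ("prove", "analyze", "step by step")


def predict_quality(prompt: str, model: Model) -> float:
    """Stand-in for the small predictor model: estimated answer quality in [0, 1].

    Crude heuristic (an assumption for illustration): long prompts or prompts
    with reasoning-heavy keywords are predicted to suffer on the small model.
    """
    hard = len(prompt.split()) > 50 or any(h in prompt.lower() for h in HARD_HINTS)
    if model == LARGE:
        return 0.90
    return 0.60 if hard else 0.88


def route(prompt: str, models: list[Model], tolerance: float = 0.05) -> Model:
    """Pick the cheapest model predicted to be within `tolerance` of the best."""
    scores = {m: predict_quality(prompt, m) for m in models}
    best = max(scores.values())
    candidates = [m for m in models if scores[m] >= best - tolerance]
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)


simple = route("What is the capital of France?", [SMALL, LARGE])
hard = route("Analyze this contract step by step", [SMALL, LARGE])
print(simple.name, hard.name)
```

Under these assumptions, the short factual question goes to the cheaper model, while the request to analyze a contract step by step goes to the larger one. The `tolerance` parameter is where the performance/cost trade-off lives: raising it sends more traffic to the cheaper model.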

"Sometimes, my query could be very simple. Do I really need to send that query to the most capable model, which is extremely expensive and slow? Probably not. So basically, you want to create this notion of 'Hey, at run time, based on the incoming prompt, send the right query to the right model,'" Deo explained.

LLM routing isn't a new concept, of course. Startups like Martian and a number of open source projects also tackle it, but AWS would likely argue that what differentiates its offering is that the router can intelligently direct queries without a lot of human input. It is also limited, though, in that it can only route queries to models in the same model family. In the long run, Deo told me, the team plans to expand this system and give users more customizability.

Lastly, AWS is also launching a new marketplace for Bedrock. The idea here, Deo said, is that while Amazon is partnering with many of the larger model providers, there are now hundreds of specialized models that may only have a few dedicated users. Since those customers are asking AWS to support these models, the company is launching a marketplace for them. The only major difference from the rest of Bedrock is that users will have to provision and manage the capacity of their infrastructure themselves, something Bedrock usually handles automatically. In total, AWS will offer about 100 of these emerging and specialized models, with more to come.