- Jensen Huang made a bold prediction that computing power will increase a millionfold in a decade.

- So-called “scaling laws” observe that AI models get smarter with more computing power.

- Reports of slowdowns in AI model improvements have cast doubt on scaling laws.

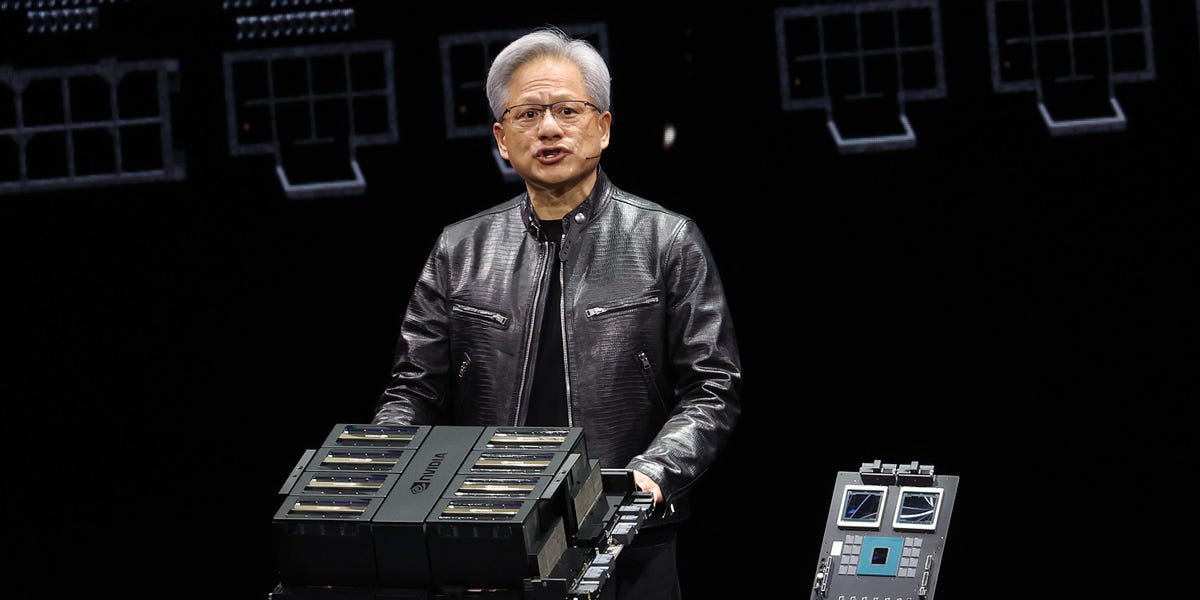

Nvidia CEO Jensen Huang has said that the computing power driving advances in generative AI is projected to increase by “a millionfold” over the next decade.

In a special address on Monday, the billionaire chip boss told an industry conference in Atlanta that computing power was seeing a “fourfold” increase annually. Compounded over 10 years, that rate would make a vital resource of the AI boom roughly a million times more powerful.
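The “fourfold” and “millionfold” figures are consistent with each other if the annual increase is assumed to compound at a constant rate for the full decade. A minimal sketch of that arithmetic follows; the function name `compound_growth` is illustrative only, and the constant-rate assumption is ours rather than something Huang spelled out.

```python
def compound_growth(annual_factor: float, years: int) -> float:
    """Total multiplier after `years` of growth at `annual_factor` per year.

    Assumes the same growth factor applies every year (simple compounding).
    """
    return annual_factor ** years


# Fourfold growth per year, sustained for 10 years.
total = compound_growth(4, 10)
print(f"{total:,.0f}x")  # 1,048,576x
```

Four multiplied by itself 10 times is 1,048,576, which is where the “millionfold” shorthand comes from.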

Nvidia has surged to become the world’s most valuable company in the generative AI era. Companies across the tech sector have lined up to secure supplies of its specialist chips, known as GPUs, which offer the computing power needed to train smarter AI models.

Huang said that computing power was a key component of the so-called “scaling laws” that observe how AI large language models, or LLMs, see gains in performance as they grow in size and access more computing power and data.

According to the Nvidia CEO, “scaling laws have shown predictable improvements in AI model performance.”

However, fresh doubt has been cast over these scaling laws this month. Multiple reports have suggested that some of the top AI labs in Silicon Valley are struggling to eke out strong performance gains from their next-generation models.

OpenAI, for instance, is facing slower rates of improvement with its upcoming AI model, Orion, according to The Information. Meanwhile, Ilya Sutskever, former chief scientist at OpenAI, told Reuters that scaling the “pre-training” phase of AI, which involves data and computing power, had plateaued.

In his speech, Huang appeared to respond to the recent uncertainty around scaling laws by saying that they “apply not only to LLM training” but to “inference” too, the process by which AI models respond to user queries and reason once they’ve been trained.

“Over the next decade, we will accelerate our road map to keep pace with training and inference scaling demands and to discover the next plateaus of intelligence,” he said.

Nvidia did not immediately respond to BI’s request for comment.