Internet measurement can be understood as the act of collecting and analyzing data related to, or demonstrative of, how the internet is performing. Much as we measure changes in our environment, economy, and health, we measure the internet to see how it’s working and to check whether any changes are having the intended effect. There are many ways to measure the internet, just as there are many ways to ask a friend how they feel. But no matter how we choose to do it, internet measurement, like all data-centric disciplines, inevitably bumps into questions: what are we trying to measure? What does it mean for the internet to be working the way we want it to? And who is we?

To generalize, the design of every internet measurement methodology includes decisions about where in the network to test and what aspects of behavior are being observed. For example, to measure the “speed” of a connection, a certain amount of data can be sent from Point A in the network to Point B, and the time it takes to travel between the two recorded. Simple in the abstract, but in practice, more particular decisions are needed to implement the measurement. The internet, after all, is a stack full of idiosyncratic protocols heaped onto one another, so which of these protocols are we paying attention to? The network layer? The application layer? The transport or routing layer? The easy answer is “the ones that matter,” but which ones matter depends on what kind of data is being transferred and why.
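As a rough illustration of the “speed” example above, here is a minimal sketch in Python. The function names are hypothetical, and the `send` callable is only a stand-in for whatever actually moves the bytes from Point A to Point B, which is precisely the decision the paragraph says is left open.

```python
import time
from typing import Callable


def throughput_mbps(num_bytes: int, seconds: float) -> float:
    """Convert a byte count and an elapsed time into megabits per second."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return (num_bytes * 8) / (seconds * 1_000_000)


def timed_transfer(send: Callable[[bytes], None], payload: bytes) -> float:
    """Time one call to `send` (a hypothetical stand-in for the real transfer
    from Point A to Point B) and return the observed throughput in Mbps."""
    start = time.perf_counter()
    send(payload)
    # Guard against a zero reading when the transfer is effectively instant.
    elapsed = max(time.perf_counter() - start, 1e-9)
    return throughput_mbps(len(payload), elapsed)


# 12.5 million bytes delivered in one second is 100 megabits per second.
print(throughput_mbps(12_500_000, 1.0))
```

The arithmetic is trivial; everything that makes the number meaningful (which protocol carries the payload, and where the two endpoints sit) lives inside `send`.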

This quickly brings us back to the question of what we are trying to measure. Is the internet for browsing self-published websites? E-commerce? Messaging? Video streaming? Game playing? Social networks? While the intuitive answer might be all of the above, settling on a particular definition that accurately represents that reality has plagued internet researchers for decades, as has the location of the chosen vantage points: where are Point A and Point B? Which parts of the network do we care about being well connected? Are the pathways between users and popular content providers such as Netflix, Google, and Facebook the ones that matter, or is it the pathways between these content providers and the rest of the internet that we should be paying attention to? And what about the rest of the internet? How well can we access content stored outside the boundaries of platform dominance? Though they rarely phrase them in such existential terms, policymakers implicitly consider such questions when defining what internet service providers (ISPs) can and cannot do, and what their responsibilities are as public infrastructure.

In 2022, the Federal Communications Commission (FCC) introduced the Broadband Data Collection (BDC) program, a renewed effort to measure broadband availability and map the digital divide in the United States. While reports of the digital divide’s severity differ (the FCC has previously reported 14.6 million Americans as being without adequate internet access, while others have estimated 42 million), its negative effects have long been recognized as an urgent issue, one that has only deepened in the wake of the Covid-19 pandemic.

As the Internet Society reports, “The [digital] divide isn’t clear cut, but no matter how you cut it, digital exclusion has many adverse impacts: lack of access to healthcare and its outcomes, decreased economic opportunities and ‘the homework gap’, a phenomenon where students are given inequal access to the educational benefits of the Internet.”

The BDC was also created in support of the Broadband Equity, Access, and Deployment (BEAD) Program, a historic investment made possible by the Biden administration’s Infrastructure Investment and Jobs Act, which allocated $65 billion toward broadband infrastructure. The National Broadband Map, the BDC’s map of broadband availability in the US, was created to help the BEAD program decide how to allocate that funding to each state. These maps were also designed as an update to those created under the FCC’s Form 477 data program, which has generally been understood as a failure because of its reliance on self-reported data from internet service providers. Under the 477 program, ISPs were not only allowed to essentially regulate themselves, but they were also able to report a census block as “served” if just one household within it was able to receive the minimum speed of 25 Mbps download / 3 Mbps upload. Broadband availability maps using 477 data therefore diverged from the lived reality of those they represented. The BDC program attempted to right these widely documented wrongs by requiring data to be reported on a location-by-location basis, meaning that every individual house in a census block had to be served for the block to be considered fully served. However, the data is still self-reported, and what qualifies as a “broadband serviceable location” has been heavily disputed.
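The gap between the two reporting rules described above can be made concrete with a small sketch. It is a simplification under stated assumptions: the `Location` type and function names are hypothetical, and the real filing rules contain far more detail than a single speed threshold.

```python
from dataclasses import dataclass
from typing import List

# Minimum broadband speeds cited in the text: 25 Mbps down / 3 Mbps up.
MIN_DOWN_MBPS = 25.0
MIN_UP_MBPS = 3.0


@dataclass
class Location:
    """One household in a census block (hypothetical, simplified record)."""
    down_mbps: float
    up_mbps: float


def is_served(loc: Location) -> bool:
    return loc.down_mbps >= MIN_DOWN_MBPS and loc.up_mbps >= MIN_UP_MBPS


def block_served_477_style(block: List[Location]) -> bool:
    """477-style rule: the block counts as served if ANY location is served."""
    return any(is_served(loc) for loc in block)


def block_fully_served_bdc_style(block: List[Location]) -> bool:
    """Location-by-location rule: fully served only if EVERY location is."""
    return bool(block) and all(is_served(loc) for loc in block)


block = [Location(100.0, 10.0), Location(5.0, 1.0), Location(0.0, 0.0)]
print(block_served_477_style(block))        # True
print(block_fully_served_bdc_style(block))  # False
```

The same three households produce opposite answers depending on the aggregation rule, which is the divergence from lived reality that the 477 maps were criticized for.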

Both versions of the FCC’s broadband maps are, like all maps, manifestations of a narrative. When using them, we’re asked to assume that the internet is doing as well as the ISPs say it is. And although there are technically avenues for correcting the narrative they put forth, it’s telling that the FCC considers the ISPs’ story first and the public’s second. Instead of deciding for itself how the internet is doing, the FCC outsources the narrative to the companies whose commercial interests incentivize a story of success.

Advocates and public interest groups, such as Merit at Michigan State University and the Institute for Local Self-Reliance, have historically created their own maps, using open-source and/or crowd-sourced data that refutes 477 data and, they say, better reflects the reality of individual households. The accuracy of these counter-mapping exercises is often critiqued and called into question, with critics citing the risk of “overbuilding” infrastructure where it isn’t needed.

These well-established debates embody nuance and rigor, but ultimately they make the exercise of using these data, maps, and internet measurement methodologies a frustrating experience, one almost irrelevant to those they supposedly represent, whether that’s users, people, or customers (depending on who’s making the map). After all, the data is often only meant to provide a systematic method for recognizing what communities and community leaders already know to be true: those on the wrong side of the digital divide are left behind. Regardless of which metrics experts can agree to collect, an “accurate” map is perhaps simply the one that represents the lived experiences of its subjects.

A similar connection to lived experience is felt by those whose access to the internet is mediated through the charged politics and power dynamics of their region. In the first half of 2023 alone, Access Now’s #KeepItOn coalition recorded a total of eighty internet shutdowns in fourteen different countries. Loosely understood to have originated in the 2011 Syrian uprising, internet shutdowns have become the tool of choice for controlling protests and curbing freedom of expression in times of political unrest. Even though those who experience these events don’t need graphs to understand them, organizations such as IODA, Censored Planet, Internet Society’s Pulse, M-Lab, Google’s Transparency Report, and Kentik provide data, analysis, and insights to document internet shutdowns in an attempt to create data-driven evidence of their occurrence. The recent conflict in Gaza is the latest source of harrowing anecdotes describing what it’s like to be without telecommunications during active attacks. While the words of the victims speak clearly and without ambiguity, internet measurement data amplifies the voices of those who experience internet shutdowns by acting as a witness that can corroborate their claims.

Some assume that a fundamental property of the internet is that it’s globally connected, but countries such as Iran and China, which have built their own “splinternets,” reject that assumption. Is the internet still the internet if it isn’t globally connected? Reconciling disparate answers is the current job of governance and standardization organizations such as the Internet Governance Forum, the International Telecommunication Union, the Internet Corporation for Assigned Names and Numbers, and the Internet Engineering Task Force, all of which have been grappling with the internet’s impact on human rights and the ways in which nations use the internet to organize themselves within the global order of nations. Within these efforts, internet measurement data and research operate as an artifact through which the invisible properties and dynamics of the internet can be made visible.

As the splinternet phenomenon suggests, the central challenges of today’s internet are not only technical, but an overreliance on internet measurement data risks characterizing them as such. There is data that is capable of speaking to the experience of censorship and the digital divide, but it cannot answer why these environments occur and how they became regularized. Internet experts are quick to look for technical causes to explain why the internet isn’t working in various ways, citing inefficiencies and misallocation of resources, but perhaps the answers are both simpler and more complex. The systemic reasons that 42 million Americans do not have access to the internet are likely related to the systemic reasons that 26 million Americans do not have healthcare, but these subtleties cannot be revealed by counting how many seconds it takes for a packet to be sent across the network. Just as it would be inconclusive to study capitalism from a purely economic perspective, or urban planning through a purely architectural lens, analysis of the internet should enrich and nourish a rigorous use of internet measurement data in other fields. Like interviewing multiple sources for a story, mapping the internet with measurements from multiple disciplines and dimensions can help fill the gaps a technical ruler cannot.

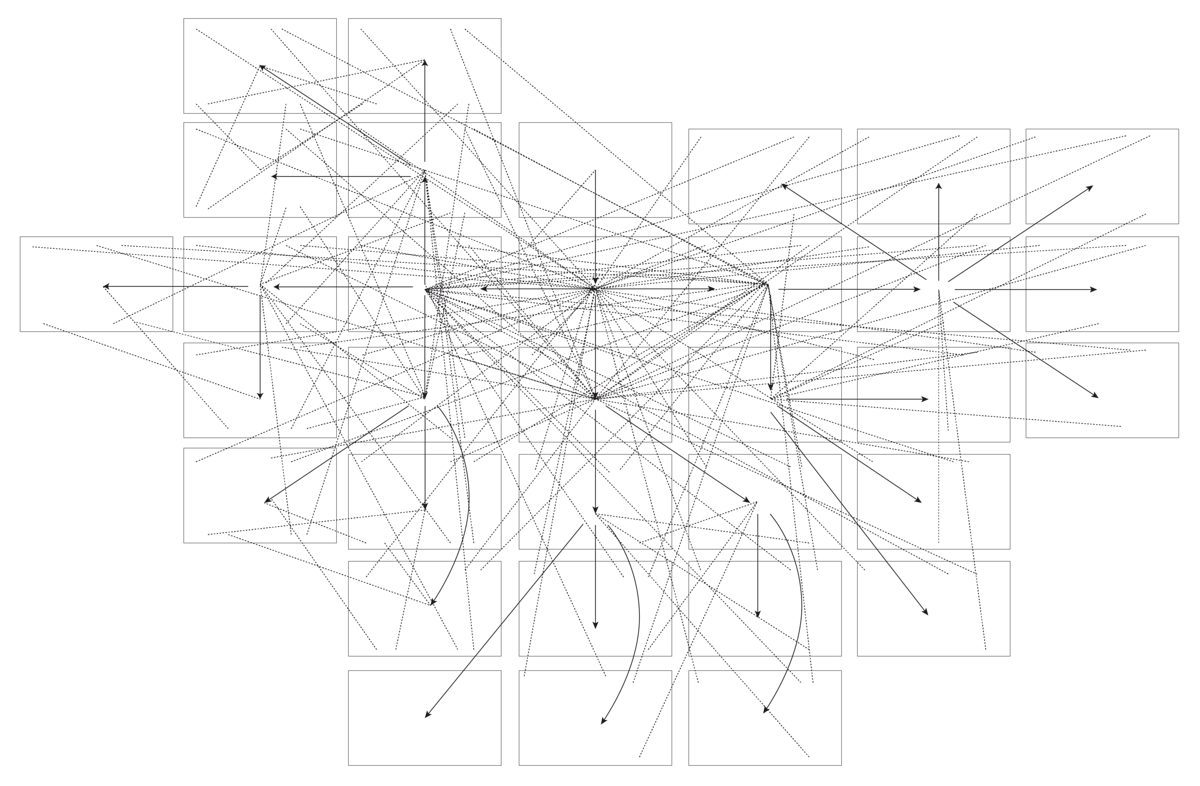

One example of what this might look like is the work of the Geopolitics of the Datasphere (GEODE) Lab at the University of Paris 8, which maps both internet traffic patterns and the geopolitical dynamics through which the infrastructure and data are mediated. Mapping exercises such as these face the difficult problem of representing the internet visually. Webbed representations of the networks that make up the internet (which are called Autonomous Systems) are commonly drawn in an amorphous space, scaled in relation to their size and traffic patterns instead of in relation to physical geography. But each of these networks is made up of physical infrastructure that exists within geopolitical borders, whose underlying dynamics of conflict and cooperation are constantly shifting. The difficulty of depicting both the geographic and network characteristics of the internet speaks to its inherent dualities: physical and digital, technical and political. The internet, like all infrastructure, is embedded within the political, social, and geographic contexts it occupies, and should be represented as such. But doing so can be difficult and requires mapping multiple layers of disparate spatial configurations.

Internet measurement data is often portrayed through a static representation, such as a map, diagram, or graph, but the internet is an active phenomenon, constantly changing and reorienting itself from minute to minute, millisecond to millisecond. As the internet changes, so does our ability to measure it consistently. Therefore, a longitudinal perspective is essential; any static representation is only relevant for the snapshot in time it presents. Fixed images of the internet are more accessible and are perhaps suitable for certain use cases, but a more nuanced perspective is a dynamic one that depicts how the internet has changed over time. For example, in the case of documenting internet shutdowns, incorporating timelines is essential to identifying a network’s decrease in connectivity as an interference event, set against the network’s usual behavior. That said, such a perspective is often difficult to execute. As the 2021 report by the Workshop on Overcoming Measurement Barriers to Internet Research points out, the internet was not built to be measured with scientific precision. Thus, researchers often resort to ad hoc methods for approximating what various metrics imply, or the reverse: what behaviors can be represented by the various metrics available. These scaffolded methods can break at any time (and they do), thereby producing a change in the data that is due to the tool rather than the subject that the tool is measuring. Furthermore, the availability of vantage points can be volatile due to the demands of equipment maintenance, changes in the physical infrastructure, or, in the case of active measurements, shifts in user testing behavior. The funding ecosystem for developing and maintaining the infrastructure needed to keep measurement available is also fragile, often depending on the whims of corporate responsibility, the remits and red tape of government regulation, and fickle academic funding cycles.
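The idea of reading a drop in connectivity against a network’s usual behavior can be sketched in a few lines of Python. This is an illustrative assumption, not the method of any organization named in this piece: the function name, the 24-sample window, and the 50 percent threshold are all hypothetical choices.

```python
from statistics import median
from typing import List, Sequence


def flag_drops(series: Sequence[float], window: int = 24,
               threshold: float = 0.5) -> List[int]:
    """Return the indices where a reading falls below `threshold` times the
    median of the preceding `window` readings (the network's usual level)."""
    flagged = []
    for i in range(window, len(series)):
        baseline = median(series[i - window:i])
        if baseline > 0 and series[i] < threshold * baseline:
            flagged.append(i)
    return flagged


# Hypothetical hourly connectivity signal: a steady day, then a sharp drop.
signal = [100.0] * 24 + [98.0, 20.0, 15.0, 97.0]
print(flag_drops(signal))  # [25, 26]
```

Note that a broken measurement tool or a lost vantage point would leave the same signature in the data as a shutdown, which is why the fragility described above matters so much for interpretation.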

These challenges notwithstanding, measuring the internet over time is the only way its impact and behavior can be understood in totality. What does it mean for the internet to be working the way we want it to? And who is we? Try as we might to answer these questions in real time, we might only be able to fully consider them within the longer arc of history: the history of both the internet itself and of the systems that create and maintain it. Internet researchers often focus on the nuances of measurement on a micro scale, and for good reason: the implementation details fundamentally shape the resulting narrative. But if we can also consider the act of measuring the internet as an archival practice, one less focused on present performance and more concerned with the overall arc of the internet’s history and future trajectory, the details of how we measure become secondary to ensuring that we can do it at all. As in measuring a child in a doorway against a hand-drawn yardstick, the ruler can be whatever you want, so long as it’s consistent. In fact, when I tell people I measure the internet for a living, they sheepishly ask, “Well… how tall is it?” As much of a dad joke as that may be, it gets at how simple the premise of internet measurement really is: measure the internet every day and watch its height change over time. How tall is the internet? We don’t know, but we can learn more by asking.