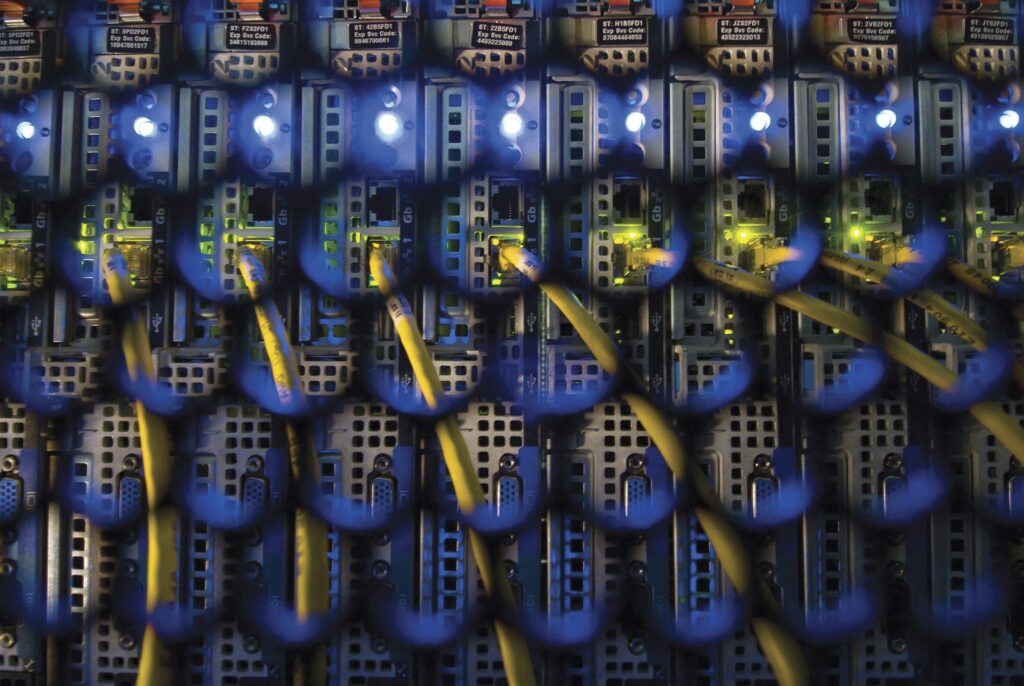

Inside a large building on UT’s J.J. Pickle Research Campus is a room the size of a grocery store in a midsize town. In that room sits row after row of racks, 7 feet tall, each holding stacks of black central processing units. It’s tempting to write that these CPUs are quietly figuring out the secrets of the universe, but quiet they are not. All conversations in this room must be shouted, as the whirring of thousands of fans makes it as loud as a sawmill. They’re on the job around the clock: no bathroom breaks, no weekends, no holidays.

This is the Data Center, the roaring heart of the Texas Advanced Computing Center (TACC), which for 23 years has been at the forefront of high-powered computing and a jewel in the crown of The University of Texas at Austin.

“Supercomputer” is an informal term, but it’s “a good Texas concept”: Our computer is bigger than yours, muses Dan Stanzione, TACC’s executive director and associate vice president for research at UT Austin for the past decade. Almost every supercomputer today is built as an amalgam of standard servers, and it’s when servers are clustered together that they become supercomputers.

Supercomputing at the University dates to the late 1960s, with mainframes on the main campus. The center that became UT’s Oden Institute for Computational Engineering and Sciences, a big contributor, was started in 1973 by Tinsley Oden. In the mid-1980s, Hans Mark, who had started the supercomputing program at NASA, became UT System chancellor and took a keen interest in its development. But funding withered by the 1990s. Everything was rebooted, so to speak, when TACC was founded in 2001, “with about 12 staffers and a hand-me-down supercomputer,” recalls Stanzione.

TACC operates three big platforms, named Frontera, Stampede3, and Lonestar6, along with a few experimental systems, many storage systems, and visualization hardware. In a given year, Frontera, the biggest computer, will work on about 100 large projects. Other machines, such as Stampede3, will support several thousand smaller projects annually.

Stanzione explains that computing speed has increased by a factor of 1,000 every 10 to 12 years since the late 1980s. In 1988, a gigaFLOPS (1 billion floating-point operations per second) was achieved. The first teraFLOPS (1 trillion) was reached in 1998. A petaFLOPS (1 quadrillion) came in 2008. An exaFLOPS (1 quintillion) was reached in 2022. Next up is the zettaFLOPS, which might be reached in fewer than 10 years.
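To get a feel for what “a factor of 1,000 every 10 to 12 years” means year to year, the short Python sketch below turns that rule of thumb into an annual growth factor and a doubling time, and checks it against the milestone years quoted above. The function names are illustrative only, and the calculation assumes smooth exponential growth, which real hardware generations only approximate.

```python
import math

# Milestone years exactly as quoted in the article.
MILESTONE_YEARS = {"giga": 1988, "tera": 1998, "peta": 2008, "exa": 2022}


def annual_growth_factor(factor: float, years: float) -> float:
    """Per-year multiplier implied by growing `factor`-fold over `years` years."""
    return factor ** (1.0 / years)


def doubling_time_years(factor: float, years: float) -> float:
    """Years needed to double, given `factor`-fold growth over `years` years."""
    return years * math.log(2) / math.log(factor)


def average_years_per_thousandfold(milestones: dict) -> float:
    """Average gap between consecutive 1,000x milestones."""
    years = sorted(milestones.values())
    return (years[-1] - years[0]) / (len(years) - 1)


if __name__ == "__main__":
    for span in (10, 12):
        growth = annual_growth_factor(1000, span)
        doubling = doubling_time_years(1000, span)
        print(f"1,000x in {span} years: x{growth:.2f} per year, "
              f"doubling every {doubling:.2f} years")
    avg = average_years_per_thousandfold(MILESTONE_YEARS)
    print(f"Average gap between quoted milestones: {avg:.1f} years")
```

Under these assumptions, performance roughly doubles every 12 to 14 months, and the quoted milestones average a bit over 11 years per thousandfold step, inside the 10-to-12-year range Stanzione cites.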

Stanzione says that over the last 70 to 80 years, this increase could be attributed roughly in thirds: a third physics, a third architecture (how the circuits and computers were built), and a third algorithms (how the software was written). But these are now changing as we bump up against the limits of physics. There was a time when making the transistor smaller would roughly double the performance, but now chips have become so small that the gates of the newest transistors are only 10 atoms wide, which results in more leakage of electrical current. “They’re so small that the power goes up and the performance and speed doesn’t necessarily go up,” Stanzione says.

The average American home runs on about 1,200 watts. TACC, by comparison, typically requires about 6 megawatts, with a maximum capacity of 9 megawatts. (A megawatt is 1 million watts, or 1,000 kilowatts.) Frontera, TACC’s biggest machine, alone uses up to 4.5 megawatts, and the entire center uses the equivalent of about 5,000 homes.
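The homes comparison is simple division, sketched here in Python. The constant and function names are illustrative, and the only inputs are the figures quoted above (about 1,200 watts per home; 4.5, 6, and 9 megawatts for the machines and the center).

```python
AVERAGE_HOME_WATTS = 1_200      # article's figure for an average American home
WATTS_PER_MEGAWATT = 1_000_000  # 1 megawatt = 1 million watts


def homes_equivalent(megawatts: float) -> float:
    """Number of average homes that draw the same power as `megawatts`."""
    return megawatts * WATTS_PER_MEGAWATT / AVERAGE_HOME_WATTS


if __name__ == "__main__":
    for label, mw in [("Frontera (peak)", 4.5),
                      ("TACC typical", 6.0),
                      ("TACC maximum", 9.0)]:
        print(f"{label}: {mw} MW is about {homes_equivalent(mw):,.0f} homes")
```

The typical 6-megawatt load works out to the 5,000 homes cited in the text; at the 9-megawatt maximum the same arithmetic gives 7,500.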

“The turbines at Mansfield Dam, full power, are 30 megawatt turbines, so we could use a third of the capacity of the dam just in the Data Center now,” Stanzione says, with a mixture of astonishment, swagger, and concern. “We’re going to add another 15 megawatts of power in the new data center that we’re building out because the next machine will be 10 megawatts.”

TACC’s new data center under construction in Round Rock will be powered completely by wind credits. Round Rock, where I-35 crosses SH-45, has become a hotspot for data centers because that’s where fiber-optic lines, which run along I-35, enter a deregulated part of Central Texas.

To mitigate the energy used by these hungry machines, TACC uses wind credits to buy much of its power from the City of Austin. A hydrogen fuel cell nearby on the Pickle Campus also supplies power, so we “have a good mix of renewables,” Stanzione says.

The first thing you notice upon entering the Data Center is that it’s cold. The faster the machines, the more power they require, and the more cooling they require. TACC once air-cooled the machines at 64.5 degrees, but you can only pull through so much air before you start having “indoor hurricanes,” Stanzione says. “We were having 30 mph wind speeds.”

Spreading the servers out would help the heat dissipate but would require longer cables between them. And even though the servers are communicating at the speed of light, as pulses shoot down a fiber-optic cable (“which is about a foot per nanosecond [one-billionth of a second] or, in good Texas-speak, 30 nanoseconds per first down,” he says), “if we spread them out, then we’re taking these very expensive computers and making them wait.” Those billionths of a second add up.
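The cable-length penalty can be put in numbers with a small Python sketch. It uses Stanzione’s rule of thumb of about one foot per nanosecond; the 3 GHz clock rate is a hypothetical value chosen only for illustration, and the function names are not from any TACC tooling.

```python
FEET_PER_NANOSECOND = 1.0  # rule of thumb quoted in the article
FIRST_DOWN_FEET = 30.0     # 10 yards


def one_way_delay_ns(cable_feet: float) -> float:
    """Nanoseconds for a signal to travel `cable_feet` of cable, one way."""
    return cable_feet / FEET_PER_NANOSECOND


def cycles_spent_waiting(cable_feet: float, clock_ghz: float = 3.0) -> float:
    """Processor clock cycles that elapse during the one-way delay.

    A clock of N GHz ticks N times per nanosecond. The 3.0 GHz default
    is an assumption for illustration, not a TACC specification.
    """
    return one_way_delay_ns(cable_feet) * clock_ghz


if __name__ == "__main__":
    delay = one_way_delay_ns(FIRST_DOWN_FEET)
    cycles = cycles_spent_waiting(FIRST_DOWN_FEET)
    print(f"{FIRST_DOWN_FEET:.0f} ft of cable: {delay:.0f} ns one way, "
          f"about {cycles:.0f} clock cycles at 3 GHz")
```

On these assumptions, every extra “first down” of cable costs a processor on the order of 90 clock cycles per one-way message, which is why packing the racks densely matters.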

In 2019, when its fastest computer was using 60,000 watts per rack, TACC switched to liquid cooling, in which a coolant is piped over the face of the chip. Horizon, the next system, will use 140,000 watts per cabinet of GPUs (graphics processing units). Now they’ve switched to another way of moving heat off the surface area of the processor: immersion cooling. It looks like science fiction to submerge computers in liquid, but it’s not water. It’s mineral oil.

TACC requires 200 staffers, many of whom work on user support, software, visualization, and AI. The core team that staffs the Data Center numbers 25, with at least one person there around the clock to watch for problems. There are people who “turn wrenches,” replacing parts and building out the hardware. Others mind the operating systems and security. Still others work on applications, scheduling, and code-tuning support. Almost half of the staff are PhD-level research scientists.

Much of the staff is divided into support teams for projects within a given domain, such as the life sciences team, because they “just speak a different language,” Stanzione says. “I could have a physicist support a chemist or a materials scientist or an aerospace engineer because the math is all shared. If you speak differential equations you can work in those areas, but when you start talking genome-wide association studies, there’s a whole different vernacular.” Besides the life sciences team, there is an AI data team, a visualization group, and experts who build out interfaces such as gateways and portals.

As for the users, scientists from some 450 institutions worldwide use TACC machines. The center is primarily funded by the National Science Foundation (NSF), which supports open science anywhere in the United States. Some 350 universities in the U.S. use TACC, but since science is often global, it’s frequently the case that a U.S. project lead will have collaborators overseas. Many foreign collaborators are in Europe and Japan. Because TACC is funded mostly by the NSF, scientists in certain countries are not allowed to use TACC machines.

Stanzione says the diverse nature of supercomputing is what attracts many to the field. “I’m an electrical engineer by training, but I get to play all kinds of scientist during a regular day,” he says. “As we say, we do astronomy to zoology.” Traditionally, supercomputers have been used most in materials engineering and chemistry. But as the acquisition of digital data has gotten cheaper in recent decades, TACC has seen an influx of life sciences work. “Genomics, proteomics, the imagery from MRI, fMRI, cryoEM [cryogenic electron microscopy]: all of these techniques that create huge amounts of digital data increasingly cheaply mean you need big computers to process and analyze it,” Stanzione says.

The upshot of all this data flowing in for about the last seven years has been the emergence of AI to build statistical models to analyze it. “That’s essentially what AI is,” Stanzione says. “That’s probably the most exciting trend of the last few years: taking all the science we’ve done and bringing AI into how that works.

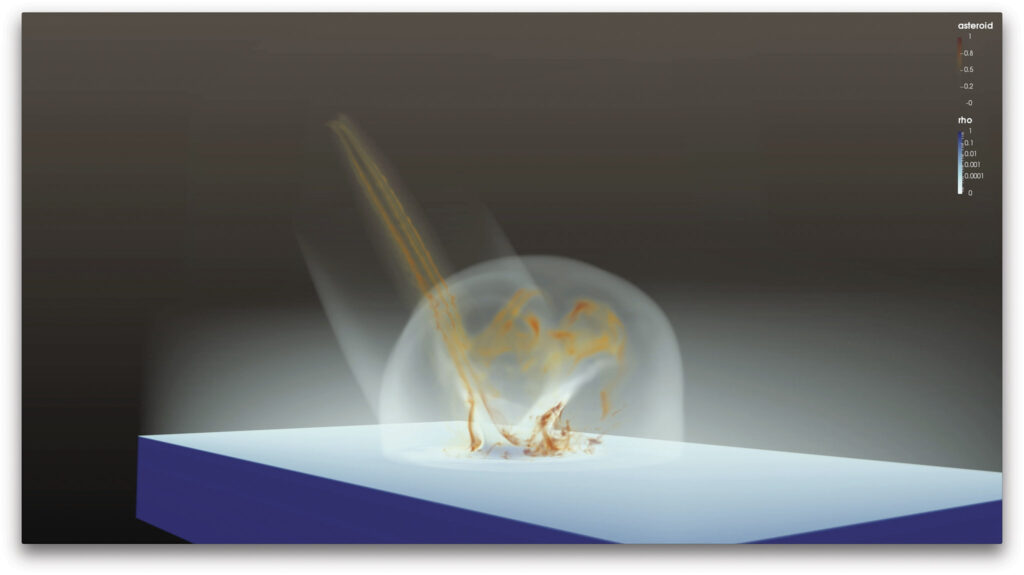

“We’re actually replacing parts of physics with what we’re calling surrogate models so we can do a faster scan in a space of parameters, simulate a possible hurricane, simulate every possible molecule for drug discovery. We scanned billions of molecules for interactions with COVID, every possible compound, and we used AI to accelerate that.”

The machines also take on other projects, including ramping up for every big natural disaster. “If there’s a hurricane in the gulf, odds are we’re [devoting] tens of thousands of cores … to hurricane forecasting,” Stanzione says. “[In the spring] we have a project with our neighbors up in Oklahoma who are doing storm chasing. We run fast forecasts of severe storms so they can send the tornado chasers out in the right direction at 4 a.m. every morning. In response to earthquakes, we do a lot of work around the infrastructure in response to natural hazards.” New earthquake-resistant building codes are the result of simulation data.

TACC systems have been used in work resulting in several Nobel Prizes. TACC machines were among the ones responsible for analyzing the data leading to the 2015 discovery by the Laser Interferometer Gravitational-Wave Observatory (LIGO) at Caltech and MIT of gravitational waves, created by the collision of two black holes. Albert Einstein predicted the existence of gravitational waves in 1916.

TACC also provided resources to the Large Hadron Collider in Switzerland. In 2012, the collider discovered the subatomic particle known as the Higgs boson, confirming the existence of the Higgs field, which gives mass to other fundamental particles such as electrons and quarks. “Almost every day, there was data coming off the colliders to look at,” Stanzione recalls. “Some days I get to be a biologist. Some days I’m a natural-hazards engineer. Some days we’re talking about astrophysics. Some days we’re discovering new materials for better batteries.”

Video screens near the Data Center show a sample of work TACC machines are doing: a study of the containment of plasma for fusion, what happened after the Japanese earthquake and tsunami of 2011, a binary star merger, a simulation of a meteor hitting the ocean off Seattle, severe storm formations, ocean currents around the Horn of Africa, a DNA helix in a drug discovery application, and the stitching together of the color graphic and all the images from some of the first James Webb Space Telescope data being worked on at UT. “We have an endless variety of stuff that we get to do,” Stanzione says.

As data has gotten cheaper, the social sciences now are moving in to take advantage. “We have people instrumenting dancers with sensors all over their bodies who we store data for, things we never did 20 years ago,” Stanzione says. According to him, there’s probably not a department at the University that doesn’t have a tie to high-performance computing.

In July, the National Science Foundation announced that, after many years of funding TACC’s supercomputers one system at a time, TACC would become a Leadership-Class Computing Facility. Stanzione calls it “a huge step forward for the NSF and computing,” and says they have been in the formal planning process for this for seven years and informally involved for 25 or 30 years. Moving TACC to what the NSF, in its understated way, terms “the major facilities account” puts it in an elite club of institutions such as the aforementioned Large Hadron Collider, LIGO, and several enormous telescopes, “things that run over decades.”

“From a scientific-capability perspective, we’re jumping an order of magnitude up in the size of computers we’re going to have, the size of the storage systems, and more staffing,” Stanzione says. As mentioned, TACC is building a new data center in Round Rock just to house its next supercomputer, Horizon. The new data center, which the federal government is paying to customize, will be managed by a private data center company. TACC will remain on the Pickle Campus.

Horizon, coming online in 2026, will be the largest academic supercomputer dedicated to open-scientific research in the NSF portfolio, providing 10 times the performance of Frontera, the current NSF leadership-class computing system. For AI applications, the leap forward will be more than 100-fold over Frontera. SDC Austin will operate the facility. Upon completion, the campus will have a critical capacity of more than 85 megawatts and a footprint of 430,000 square feet.

Besides a quantum leap in capability, there is also a consistency and sustainability aspect to the new NSF designation. Individual computers built with grant funding have a useful life of four to five years. “It’s like buying a laptop: they age out,” Stanzione explains. “If you’re partnering with one of these big telescopes like the Vera Rubin Observatory that’s going to operate for 30 years, there’s a huge risk for them if their data-processing plan relies on us and we have a four-year grant to run a machine. Now, we’re going to decade-scale operational plans with occasional refreshes to the hardware of the machine, which means we can be a more reliable partner for the other large things going on in science.”

Ecological work requires observations over decades. Astronomy work requires data to persist for a long time. In a show of leadership in that area, TACC recently sent a team to Puerto Rico to retrieve data from the Arecibo Observatory, long the world’s largest single-aperture telescope, before it shuts down. They came back with pallets of tapes holding petabytes of data that have been collected there since 1964. “If you’re making a billion-dollar investment in an instrument, you’re going to want to have both the processing capability and the data sustainability around that instrument,” Stanzione says. “For me, the first-order exciting thing is big new computers. That’s what drives us to do stuff. But for the national scientific enterprise, it’s consistent, sustainable data.”

Addressing AI within the context of supercomputing is basically redundant. “AI and the supercomputing infrastructures have converged,” Stanzione says. “We’ve been building a lot of human expertise supporting and scaling large AI runs, and now with the new facility will be building out more capacity to fully host AI models for inference, so you can build a reliable service to tag an image or identify a molecule or get an AI inference on the most likely track of our hurricane or things like that.”

He adds, “We’re where a lot of these techniques came from, and we look forward to partnering with more AI users around the campus as we both understand what it can do, how to use it responsibly, and how to minimize the energy it takes to run these big models. Those are all core things for us, and that’s probably going to be a big growth area for us in years to come.”

CREDITS: Courtesy of the University; courtesy of TACC; courtesy of the University (2); courtesy of TACC (2)